Blocked Tweets Raise Free Speech Concerns

Weekly Editorial — Posted on January 30, 2020

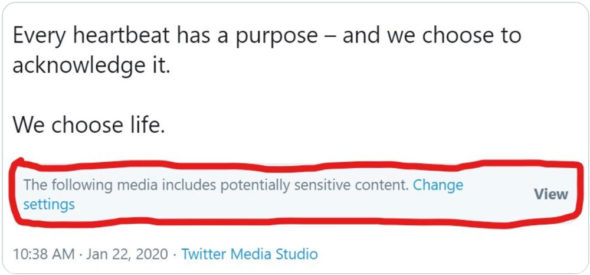

(by Kalev Leetaru, RealClearPolitics) — In the lead-up to last week’s March for Life rally, Twitter generated controversy for censoring at least two different pro-life tweets, including one by Rep. Matt Gaetz, masking them from view with a “sensitive content” warning.

After the ensuing outcry, the company followed the now-standard playbook of blaming algorithmic “error” and removed the warnings. Still, Twitter refuses to provide any additional detail about how the error occurred.

See Rep. Gaetz’s tweet, which Twitter censored: twitter.com/RepMattGaetz/status/1220007962653143040.

As social media platforms increasingly become the “public spaces” through which democratic debate occurs, the errors and inadvertent biases of their moderation black boxes* will have an ever-growing impact on democratic debate. [*In science, computing, and engineering, a black box is a device, system or object which can be viewed in terms of its inputs and outputs (or transfer characteristics), without any knowledge of its internal workings.]

We live in a world today in which Twitter openly proclaims its right to delete official presidential tweets with which it disagrees. Facebook blocks nationally elected political parties it deems “hateful” and has gone as far as quietly intervening in a national election to subject posts supporting one party to special scrutiny.

A small number of private companies now have the ultimate authority over what we are permitted to see and say online and can intervene at will in political speech, deeming any viewpoint they disagree with as “unacceptable” and deleting all mentions of it.

In the physical world, any voice, no matter how marginalized or how hateful, can speak freely in a public space, a legacy of the Founders’ belief that the free flow of ideas forms the bedrock of democracy.

Yet the First Amendment does not apply in cyberspace. The digital “public spaces” in which our societal debates occur are in fact all private property, owned by a small cadre of ideologically homogeneous Silicon Valley founders who are free to set their own rules for discourse within their walled gardens.

As societies around the world become increasingly diverse and divergent in their beliefs, it becomes ever more difficult to devise a single set of speech rules with which all of society can agree. Is curbing illegal immigration a legitimate policy question or should it be prohibited as “hate speech”?

Behind all these actions are armies of human and algorithmic moderators policing the global conversation, their instructions secret and their decisions final. When they make mistakes or their decisions generate controversy, companies are under no obligation to provide the public further detail about what specifically happened.

In the case of the March for Life tweets, a Twitter spokesperson confirmed that algorithmic error led to the tweets being flagged as sensitive content but declined to provide any further information. Asked why the company believed the public was not entitled to more information on what specifically about the tweets triggered the algorithm, the spokesperson said the company had no further comment.

Similarly, when asked last year why Facebook believed it was acceptable for it to determine what kinds of political speech and beliefs were permissible on its platform, rather than leaving those decisions to the courts and democratically elected governments, and when asked to provide more detail about its mistakes, the company offered that “they’re definitely important questions” but that it had no further comment.

Despite their veneer of impartiality, algorithms are devised by humans who build their own biases and beliefs into their creation.

Could the March for Life tweets have been flagged by an algorithm that was deliberately or inadvertently trained to recognize anti-abortion [pro-life] speech as sensitive speech?

What other topics are just a tweet away from being incorrectly censored? Without greater transparency from Silicon Valley we simply have no idea.

Most importantly, how do we ensure ideological diversity in these moderation systems, especially when their creators tend to skew heavily towards one side of the political spectrum?

In the end, as a handful of social platforms increasingly become the de facto public squares through which societies debate their most important issues, the free flow of information that underpins democracy itself is at times blocked by unelected and unaccountable censors enforcing their own beliefs on all of society. Orwell would be proud.

RealClear Media Fellow Kalev Leetaru is a senior fellow at the George Washington University Center for Cyber & Homeland Security. His past roles include fellow in residence at Georgetown University’s Edmund A. Walsh School of Foreign Service and member of the World Economic Forum’s Global Agenda Council on the Future of Government.

Questions

1. The purpose of an editorial/commentary is to explain, persuade, warn, criticize, entertain, praise or answer. What do you think is the purpose of Kalev Leetaru’s editorial? Explain your answer.

2. In this commentary, Mr. Leetaru makes the following points:

- "A small number of private companies now have the ultimate authority over what we are permitted to see and say online and can intervene at will in political speech, deeming any viewpoint they disagree with as 'unacceptable' and deleting all mentions of it."

- "Behind all these actions are armies of human and algorithmic moderators policing the global conversation, their instructions secret and their decisions final. When they make mistakes or their decisions generate controversy, companies are under no obligation to provide the public further detail about what specifically happened."

- "In the end, as a handful of social platforms increasingly become the de facto public squares through which societies debate their most important issues, the free flow of information that underpins democracy itself is at times blocked by unelected and unaccountable censors enforcing their own beliefs on all of society."

What should/can be done about this? Explain your answer.

3. Following Twitter’s censoring of Rep. Gaetz’s original pro-life post, Gaetz tweeted:

Wednesday: @Twitter censors our pro-life video as "sensitive content."

Today: @Twitter censors ANOTHER pro-life video our office created AND censors @TeamTrump's pro-life video.

This is unacceptable. We need answers.

Twitter user @SharinStone commented on the post:

"I have one....Go start your own social media platform."

a) This is a rude remark about a legitimate concern. Why is this "suggestion" so ridiculous?

b) Obviously this Twitter user is a progressive. Do you think most progressives agree with this user’s comment? Explain your answer.